The GFS and its global data assimilation system are based on decades-old science and technology, resulting in U.S. global weather prediction lagging behind leading groups such as the European Centre for Medium-Range Weather Forecasts (ECMWF) and the UK Met Office (UKMET). GFS is also a hydrostatic model, meaning it is not suitable for the higher resolutions that are the clear direction of global weather prediction.

The latest verification (in this case, the global 5-day forecasts at 500 hPa) shows the European Center (red triangles) to be consistently superior to the U.S. GFS (black line).

The National Weather Service admits the GFS needs to be replaced. During the past year or so, the National Weather Service, using money provided by Congress after the relatively poor Hurricane Sandy forecast, has sponsored a model "bake off" between potential replacements. The project, called NGGPS, the Next Generation Global Prediction System, has now narrowed down the selection to two possible models (also known as dynamic cores). And the differences between these candidates could not be more stark.

The first, MPAS (Model for Prediction Across Scales), was developed by NCAR (the National Center for Atmospheric Research), the main national research center of the atmospheric community. It is an innovative model that can accurately predict weather on all scales, allows variations in resolution to produce finer resolution where needed, and is very efficient in using computer resources.

The competitor is FV3 (Finite-Volume Cubed-Sphere), developed by a NOAA lab, the Geophysical Fluid Dynamics Laboratory (GFDL). As we will see, the model does poorly at high resolution and is not a community model.

This blog will make the case that picking FV3 would be a disaster for U.S. weather prediction and NOAA, ensuring that NOAA would maintain its current isolation from the research community and the second-class status of the U.S. weather prediction enterprise. In contrast, selection of MPAS would revolutionize U.S. numerical weather prediction, joining NOAA and the research community to build a world-class capability.

But I am worried. It appears that some NOAA/NWS management is leaning towards the in-house solution, even though the negative implications for U.S. weather prediction are profound.

Model Structure: Very Different Approaches

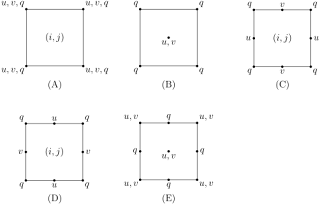

A new global model has to solve a number of problems. Traditional models solve the equations describing the atmosphere on a latitude-longitude grid (see below), but this causes severe problems near the poles, where the points come closer and closer together. Expensive fixes are needed that slow the model and make it less accurate.
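To make the pole problem concrete, here is a minimal Python sketch of how the east-west distance between neighboring points shrinks with latitude on a regular latitude-longitude grid. The 0.25-degree spacing and the function name are assumptions chosen for illustration, not the settings of any particular model.

```python
import math

EARTH_RADIUS_KM = 6371.0  # approximate mean Earth radius


def east_west_spacing_km(lat_deg: float, dlon_deg: float) -> float:
    """East-west distance between adjacent points on a lat-lon grid.

    On a sphere, a circle of latitude has radius R * cos(lat), so a fixed
    longitude increment covers less and less distance toward the poles.
    """
    return EARTH_RADIUS_KM * math.cos(math.radians(lat_deg)) * math.radians(dlon_deg)


# Hypothetical grid with 0.25-degree longitude spacing (an assumption).
DLON_DEG = 0.25
for lat in (0, 45, 80, 89):
    spacing = east_west_spacing_km(lat, DLON_DEG)
    print(f"latitude {lat:2d} deg: east-west spacing {spacing:6.2f} km")
```

The same longitude increment that gives tens of kilometers at the equator gives well under a kilometer next to the pole, which is why such grids need special treatment there.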

Scientists at NCAR took a fresh approach to the problem and came up with an innovative solution: instead of a grid of squares/rectangles, why not use hexagons? Then there is no problem with the poles, and it turns out that this approach increases the accuracy of the simulations. But the NCAR folks did not stop there. They built in a capability to embed smaller hexagons in any area of choice, allowing the resolution of the simulation (the distance between the centers of the hexagons) to increase where it is needed (in this case over the U.S.). A very useful capability.

The NOAA scientists at GFDL decided on another approach, called a cubed sphere, in which they retained a traditional grid structure, but divided the earth into a series of separate grids that mesh at the seams. There are overhead and numerical accuracy issues with such an approach. To get more resolution in specific areas, FV3 uses a traditional nesting approach, in which a higher resolution grid is run over a smaller domain, a method that brings issues at the grid interface.

In short, the MPAS approach is superior, a conclusion reached by others, such as the modeling scientists at the Earth System Research Laboratory (ESRL), which developed two models called FIM and NIM that also used the hexagonal shapes.

Model Variable Structure

This is going to get a bit technical, but the point will be critical. Model variables, such as temperature, pressure, and winds can be distributed in the models in various ways. MPAS uses the highly accurate "C" grid, while FV3 uses the substantially less accurate "D" grid, which results in a much smoother solution.
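For readers who want to see what the two letters mean, here is a minimal Python sketch of the standard Arakawa C and D staggerings for a single square cell. It is a simplification: MPAS applies the C-grid idea to hexagons, not squares, and the dictionary layout and function name are hypothetical, chosen only for illustration.

```python
# Offsets are (x, y) positions relative to the cell center, in units of one
# grid spacing. "u" is the east-west wind, "v" the north-south wind.
STAGGERING = {
    "C": {
        "mass": [(0.0, 0.0)],
        "u": [(-0.5, 0.0), (0.5, 0.0)],  # on west/east faces: normal to the face
        "v": [(0.0, -0.5), (0.0, 0.5)],  # on south/north faces: normal to the face
    },
    "D": {
        "mass": [(0.0, 0.0)],
        "u": [(0.0, -0.5), (0.0, 0.5)],  # on south/north faces: along the face
        "v": [(-0.5, 0.0), (0.5, 0.0)],  # on west/east faces: along the face
    },
}


def normal_wind_on_faces(grid: str) -> bool:
    """Return True if u is stored on the east/west faces it flows through."""
    return all(dx != 0.0 and dy == 0.0 for dx, dy in STAGGERING[grid]["u"])


for name in ("C", "D"):
    print(f"{name} grid stores face-normal winds directly: {normal_wind_on_faces(name)}")
```

The flow of air into or out of a cell depends on the wind component that crosses each face. On the C grid that component is stored right where it is needed; on the D grid it has to be averaged from neighboring points, and averaging is one commonly cited source of smoothing.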

Between this grid structure and the larger-scale structure noted above, MPAS can provide much MORE detail for the same resolution (distance between the grid points in the model). In fact, analysis of simulations by MPAS and FV3 for identical cases revealed that MPAS has at least TWICE the "effective" resolution of FV3, and probably a lot more. FV3 has real problems defining smaller scale features at high resolution, a critical requirement for future forecast applications. Let me demonstrate this.

In the center panel below is a radar image for 0000 UTC 5/21/2013. You will note a narrow line of thunderstorms from Texas into western Illinois. A 72-h MPAS forecast at 3-km spacing is impressive. Good structure, with a small eastward displacement of the line. But the much higher resolution FV3 (a grid spacing of 1.3 km) is having real problems, with convection being too broad and discontinuous. This is not unusual behavior for FV3 and is to be expected with the diffusive "D" grid.

Speed

Supporters of FV3 like to note that FV3 is faster than MPAS (about twice as fast at the same grid spacing). But this is a false argument. MPAS has at least twice the effective resolution of FV3 at fine resolutions. Doubling the horizontal resolution of a weather forecasting model requires about 8 times more computer resources. So in terms of effective resolution, which is what really counts, MPAS is probably at least FOUR TIMES FASTER than FV3. Maybe more.
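The arithmetic behind that claim can be sketched in a few lines of Python. The factor-of-two numbers come from the discussion above; the cubic cost rule is an assumption standing in for "about 8 times," based on the usual reasoning that doubling resolution doubles the points in each horizontal direction and also doubles the number of time steps. The variable and function names are hypothetical.

```python
def cost_factor(resolution_factor: float) -> float:
    """Relative compute cost when horizontal resolution is multiplied.

    Assumes cost grows with points in x, points in y, and the number of
    time steps, so the cost scales as resolution_factor cubed.
    """
    return resolution_factor ** 3


# Figures taken from the text above.
fv3_speed_advantage = 2.0             # FV3 is ~2x faster at the same grid spacing
mpas_effective_resolution_gain = 2.0  # MPAS has at least 2x the effective resolution

# Extra cost for FV3 to reach the same effective resolution as MPAS.
fv3_cost_to_match = cost_factor(mpas_effective_resolution_gain)

# Net advantage of MPAS once FV3's raw speed is credited.
net_mpas_advantage = fv3_cost_to_match / fv3_speed_advantage

print(f"Cost of doubling resolution: {fv3_cost_to_match:.0f}x")
print(f"MPAS advantage at equal effective resolution: {net_mpas_advantage:.0f}x")
```

If the effective-resolution gap is larger than a factor of two, the same calculation gives MPAS an even bigger edge.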

Community Modeling and NOAA/NWS Isolation

If there is one reason why the National Weather Service has fallen behind in numerical weather prediction, it is its intellectual isolation from the vigorous and large U.S. weather research community. In the 80s and 90s, the NWS went its own way in the development of a national model (first the NGM and then NMM) rather than use the superior models developed at NCAR (MM5 and WRF). The result was inferior forecasts. By picking FV3, the NWS would be making the same mistake again, going its own separate way, while NCAR and the research community go another.

Pick MPAS, and the NWS not only gets a superior model, it will team up with thousands of scientists connected with NCAR and those using NCAR models, resulting in far faster development and extensive testing by a large outside community.

Summary

The National Weather Service is now at a crossroads for global weather prediction. It can go with an in-house model developed within NOAA (FV3). But it is an inferior model that is not well suited to deal with the high resolutions expected in the near future. A model that provides less simulation "bang" for the computer resource "buck." A model that will AGAIN leave the NWS isolated from the vast U.S. research community, repeating a mistake that resulted in NOAA weather prediction descending into third-rate status.

Or it could do something very different. Choose NCAR's MPAS model and join forces with NCAR and the academic community. MPAS is a superior model in almost all ways and will provide a robust global modeling platform for the next several decades, a role for which the GFDL FV3 is ill-suited.

One choice leads to greatly improved weather prediction, the other stagnation and isolation. I hope the NWS chooses wisely.

_____________________________________

Announcement: Public Talk: Weather Forecasting: From Superstition to Supercomputers

I will be giving a talk on March 16th at 7:30 PM in Kane Hall on the UW campus on the history, science, and technology of weather forecasting as a fundraiser for KPLU. I will give you an insider's view of the amazing story of weather forecasting's evolution from folk wisdom to a quantitative science using supercomputers. General admission tickets are $25.00, with higher priced reserved seating and VIP tickets (including dinner) available. If you are interested in purchasing tickets, you can sign up here.

____________________________________

I was a debate coach so I must ask you to quantify the other position. If you cannot, it is often because that one has convinced themselves prior in a way beyond approach - in other words, with subjectivity

ETA on model decision? Certainly has been long overdue. The ECMWF is the primary model of choice by veteran forecasters nowadays although getting higher resolution products takes a bit more searching and cost. The beauty of the NWS is the free access to tons of data to the public. With an upgraded model in the pipeline and the transparent access...it will be a new stage of U.S. weather forecasting.

Hope we get there soon. Thanks for the article.

Both models are hypothetical. Isn't it reasonable to make predictions THEN decide?

Such issues generally have reasonable arguments from both sides. What are advantages of the NOAA model?

It seems to me that the stakes for NOAA/NWS are incredibly high. Presumably, given its open development model, MPAS will play an important role at other organizations concerned with weather forecasting, including those in the private sector.

If that's the case, NOAA/NWS will have nowhere to hide if its new model proves to be consistently inferior. The implications, especially if there's a forecasting failure that results in major property damage or loss of life, seem pretty clear to me.

Excellent stuff, thanks for bringing this to our attention.

I was somewhat amused, and horrified, by this blog. I worked in the oil industry for years, some of it in research. We built 3D earth models used to propagate acoustic and electromagnetic waves. We showed years ago that hexagonal sampling is more efficient than rectilinear. In addition, using models that have a variable sampling grid is more, in some cases much more, computationally efficient (fine sampling where you need it, coarse otherwise). It is painfully clear to me that the MPAS approach is the only sensible one.

On the other hand, changing the modeling software to utilize the "new" grid may be costly.

If they do choose FV3, is there any possibility of the weather community/research institutions pooling resources to continue development of MPAS? This would create some competition and also clearly demonstrate the ongoing frustration with NOAA/NWS.

- Douglas

Politics as usual in DC...

Is the computing speed the only argument for the FV3? If it looks likely that NOAA/NWS is leaning that direction, what are the other "pros" that it offers? Cost?

I second Bloggods query (top of page)

What do your peers at NWS say, or are they under a gag order?

Researchers have been using MPAS since 2013 - there's nothing "hypothetical" about it.

Merciful heavens, people actually did numerics on a lat/long grid?

Nostalgic and amusing to read a discussion of the advantages of hex grids in modeling; at an analog level, war gamers hit on hex grids as a replacement for rectilinear in the mid-1960s, for similar reasons. Games like chess and Go had made good use of squares; but both are abstract. In attempting to model the real world, a hex grid has proven far more versatile.

Even at the computationally low intensities of a board war game, the rectilinear model compares very unfavorably with hexagonal (consider the problem of representing points of sail and wind direction in a naval game-- squares just don't work.)

But this begs a further question: in gaming, computers allowed us to move to continuous models for things like naval and air simulations, long ago. Vastly more accurate, if not necessarily more fun. Do any such models exist for weather and climate? What would be the computational demands of a continuous weather model? Huge, no doubt -- but I'd have assumed that someone would have ballparked them.

Dr. Mass: If you haven't already read it, there's an interesting book by George Dyson called "Turing's Cathedral" which talks about the early days of computing (largely relating to the H-bomb development). It's rather heavy (IMO) on biographical background, but there's a chapter called "Cyclogenesis" which talks about the first attempts at numerical weather prediction in the late 1940s.

Thanks for your insights, always a pleasure to read

Is there a place to ask off topic questions?
