Today there is an article in the New York Times magazine section by Michael Behar that describes the deficiencies in the National Weather Service numerical weather prediction. Like any media article, it has its strengths and weaknesses, but its basic thesis is correct:

U.S. numerical weather prediction (NWP), run by the National Weather Service and NOAA (National Oceanic and Atmospheric Administration), could provide Americans with far better weather forecasts, but only with substantial changes in organization and in how it interacts with the large U.S. weather prediction community.

This blog will outline some of the current problems and provide specific recommendations on how the situation could be improved rapidly.

Why should you consider what I have to say? My research is on numerical weather prediction and I have run an operational NWP center for two decades. I have thought about this issue for many years and have written papers in the peer reviewed literature about it. What I am saying is consistent with a long list of National Academy reports and the conclusions of national workshops. I have served on advisory committees to the National Weather Service and my recommendations are similar to those of other national experts with whom I have served. I know all the major players. And what I will describe below is based on hard facts, including objective verification scores and peer-reviewed research.

Why should you care? Numerical weather prediction is the foundation of all weather forecasts. Good weather forecasting saves lives, protects property, and makes our economy more efficient. Small improvements in weather prediction are worth billions of dollars. Large improvements, far more.

My essential conclusions are these: the U.S. government should have the best numerical weather prediction in the world, but doesn't. The essential problem is poor organization and a lack of strategic planning in NOAA/NWS, coupled with isolation from the research community and a willingness to be second rate. Groups outside of NOAA have contributed to the current mess. The deficiencies in U.S. operational NWP could be fixed in a few years if the right steps were taken.

An Important Point. What I am going to describe is not a personal criticism of NOAA/NWS scientists and managers, most of whom are technically adept and highly motivated. The NOAA/NWS numerical prediction system is essentially broken and only substantial reorganization can fix its persistent decline. It will take Congress and NOAA/Dept of Commerce leadership to put U.S. weather prediction back on track. Doing so would have enormous benefits in saving lives, protecting property, and enhancing the economy of the U.S.

A Very Complex Technology

Numerical weather prediction may be the most complex technology developed by our species, requiring billions of dollars of sophisticated satellites, the largest supercomputers, complex calculations encompassing millions of lines of code, and much more. The complexity of this technology makes it difficult for the media to understand the problems and problematic for Congress to intervene to improve the situation. Currently, there are several bills in Congress directed toward improving U.S. weather prediction, but most of them do not solve the fundamental issues. Congressmen/women don't know where the skeletons in the closet are located.

This blog will tell them where to look.

_______________________________

The Problems

The deficiencies in U.S. numerical weather prediction (NWP) can be best analyzed by dividing the issues into global scale and national/regional scale prediction. U.S. NWP is done at the Environmental Modeling Center (EMC) of NCEP (National Centers for Environmental Prediction), which is part of the National Weather Service, which in turn is part of NOAA, which is in the Dept. of Commerce.

Global Forecasting

The GFS lags behind

The fact that the U.S. global prediction model (GFS) is inferior to both the European Center (ECMWF) and UKMET office models has gotten a lot of press, starting with Hurricane Sandy. The latest verification scores (5 day, 500 hPa, global, graphic below) over the past month show the story: ECMWF is best (red), UKMET is in second place (yellow), and the US GFS is third (black), essentially tied with the Canadians (CMC, light green). You will notice that the US model occasionally has serious skill declines (dropouts), with substantial loss of skill.

A serious impact of the problems with the US model is its inferiority with track forecasts of tropical storms, as illustrated by the track error for Hurricane Matthew. At five days, the US GFS had a track error of 250 km, while the ECMWF and UKMET had errors of 100 km. A real problem.

The poor performance of the GFS was perceived as sufficiently serious that the US Air Force decided to replace the GFS with the UKMET model.

The GFS is an old model, with inferior physical parameterization (how physical processes like convection, moist processes, radiation, and others are described). It also has inferior data assimilation (how observations are used to prepare the initial state of the model forecast). The National Weather Service knows it has a real problem and is now working on securing a replacement global model through its NGGPS (Next Generation Global Prediction System) effort. But as I described in previous blogs, their tests were not forward looking, mainly using the old physics and the model resolution of today, rather than the situation of the future. Recently, they picked a new dynamical core for their new global model (GFDL's FV3), but have been slow to come up with a plan to make it a community model and to add physics and data assimilation components.

Recently, a private sector firm, Panasonic, announced that it has taken the NWS GFS model and made modest improvements in its physics and quality control, leading to substantially better forecasts than the National Weather Service. If confirmed, this modeling advance would be an indictment of the NWS's slow evolution of the US global model.

The CFS uses large amounts of computer power, but provides little benefit

A less publicized problem for global modeling at NWS's NCEP is their long-range model called the Climate Forecast System Version 2 (CFSv2). Run 16 times per day (with runs ranging from 45 days to 9 months), the CFS is meant to provide subseasonal (few weeks to a month) and seasonal predictions. Unfortunately, this modeling system (which requires substantial computer resources to run) has very little skill past 3 weeks and is inferior to inexpensive statistical approaches for all but the shortest forecasts. I know a lot about CFS performance because I have had a research grant (thanks to the NWS) to evaluate it.

As shown below, the errors of CFS (for the globe, the tropics, and the midlatitudes) in the middle troposphere (500 hPa) grow rapidly 1-3 weeks into the future, and by 3 weeks virtually all skill is lost (climatology, what has happened on average in the past, is superior at longer ranges).

CFSv2 has failed to forecast nearly every major seasonal and subseasonal (3 weeks and more) event over the past several years. Last year, it predicted southern and central CA would be wetter than normal (see below), which was wrong. It failed to get the key pattern of the last few years, with a ridge over the western U.S. and a trough over the east.

CFS is a modeling system that is low resolution and on a very slow upgrade cycle. At extended ranges, inexpensive statistical approaches (like correlations with El Nino) are superior. It is wasting huge amounts of computer time and providing problematic forecasts to the country. NOAA/NWS management should pull the plug on this system and evaluate whether a better approach is possible. I believe that its Achilles heel is its coarse resolution, which fails to simulate the effects of convection (thunderstorms) correctly.

Falling Behind the European Center on Global Ensemble Forecasting

The future of forecasting is in ensemble prediction, running models many times to explore uncertainty in the predictions. Ensembles allow the estimation of probabilities. Larger ensembles are better than small ones, since they explore uncertainty more thoroughly. Unfortunately, in global ensembles, the National Weather Service badly lags the European Center (ECMWF). Today, the NWS ensemble runs with 21 members at 35 km grid spacing (the distance between the grid points at which forecasts are made) and is called GEFS. ECMWF runs 52 members with TWICE the resolution (18 km grid spacing).

ECMWF ensembles are thus inherently superior, able to provide much more detail regarding weather features and a better idea of uncertainty. In major event after major event, members of the ECMWF ensemble catch on to observed changes in weather systems before the US GFS ensemble and are generally superior. For example, here are forecasts of the two ensemble systems for Hurricane Matthew, with the ensemble forecasts initialized on October 3. The European Center forecast tracks (left side) have a far greater tendency to take the storm offshore compared to the US GEFS (right). The EC ensemble was correct.

The irony in all this is that with the recent computer upgrade, the National Weather Service has the computer power to catch up in this critical arena, but chooses not to. The ECMWF plans on running an ensemble with an amazing 5-km grid spacing in 2025. The NWS has no plans that I know of to be even close.
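To make concrete how an ensemble yields probabilities, here is a minimal Python sketch. It is an illustration only, not the method used by GEFS or ECMWF: the function name, the rainfall values, and the 25 mm threshold are all made up for the example. The probability of an event is simply estimated as the fraction of members that predict it.

```python
def exceedance_probability(members, threshold):
    """Estimate P(value > threshold) as the fraction of ensemble members above it."""
    if not members:
        raise ValueError("ensemble must have at least one member")
    return sum(1 for value in members if value > threshold) / len(members)


# Hypothetical 24-hour rainfall forecasts (mm) from a 10-member ensemble.
rain_mm = [0.0, 2.5, 12.0, 30.0, 8.0, 26.0, 1.0, 40.0, 15.0, 27.5]

prob_heavy = exceedance_probability(rain_mm, 25.0)
print(f"P(rain > 25 mm) = {prob_heavy:.0%}")
```

With this simple counting approach, an ensemble of N members can only resolve probabilities in steps of 1/N, which is one reason a 52-member ensemble gives a finer picture of uncertainty than a 21-member one.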

National and Regional Modeling

In addition to global modeling, the National Weather Service runs higher resolution models over North America with the goal of aiding forecasting of smaller scale features like hurricanes, thunderstorms, terrain-forced weather features, coastal effects, and the like. Unfortunately, the situation for NWS high resolution numerical weather prediction is at least as problematic as the global model, with one stellar exception, the High Resolution Rapid Refresh (or HRRR). As we will see, the NWS is running a huge, and unwieldy, array of models, domains, and systems for high-resolution forecasts.

The NWS runs two major modeling systems at the regional/national scale: NMMB and WRF. NMMB (also called NAM), their main high-resolution modeling system, was developed in house and is used by few outsiders, while WRF, developed by NCAR, is a community modeling system and is used by many thousands of groups, individuals and institutions.

There are two major problems with NAM. First, verification scores and a number of research papers have shown it is decidedly inferior to WRF. Second, and worse, there is overwhelming evidence that NAM is inferior to the GFS, the NWS's global model. I can demonstrate the latter by quoting from two papers in the peer-reviewed literature, the first from Charles and Colle (2009) and the second from Yan and Gallus (2016). I could supply a dozen more papers saying the same thing, including ones I have co-authored.

At the UW we verify major modeling systems over the Northwest and have done so for over a decade; as shown by the error statistics presented below, NAM has much larger errors than GFS. My conversations with NWS forecasters all over the US confirm that GFS is generally far superior to NAM.

When NOAA ESRL did their own evaluation of WRF versus NAM for use in their new Rapid Refresh system (making forecasts every hour!), their evaluation found that NAM is not as good as WRF.

So why is the National Weather Service running an inferior modeling system? Inertia and the wish to keep their own proprietary system. They try to rationalize this by saying that NAM is good for an ensemble system, but that is really not true because far better model diversity can be acquired without using an inferior system. Clearly, NAM should be dropped in favor of WRF, which is not only superior in design and verification, but takes advantage of the creative energies of thousands of individuals and groups.

But it is worse than that. The NWS is running a large collection of domains and runs--- national forecasts, forecasts for 1/3 of the U.S., and many more. Some with NMMB, some with WRF. A confusing mess.

But I am only warming up with NWS national modeling problems. The NWS runs a higher-resolution ensemble forecasting system (called SREF, Short Range Ensemble Forecast System) with 16-km grid spacing. SREF uses both WRF and NMMB members, but that causes a big problem--the forecasts divide into two separate groups, which is NOT what you want in an ensemble system (which is supposed to be sampling from the true uncertainty of the forecast). The US SREF has roughly the same resolution as the European Center GLOBAL ensemble system, which is also much larger.

NCEP SREF forecasts tend to separate into two families of solutions, one for the WRF members (warm colors) and the other for the NAM members (cool colors). A plot of cumulative precipitation is shown in this figure.

A rational decision would be to drop the NWS SREF and increase the resolution of the US global ensemble system to 15-20 km resolution.

But if you want a real problem, consider the tens of millions of dollars spent on the HWRF system. For years, hurricane track forecasts improved, but intensity forecasts did not. After the substantial intensity forecast errors during major storms in 2005, Congress gave the NWS about 100 million dollars to deal with the hurricane intensity problem. So the NWS developed HWRF (under the HFIP effort), using its inferior regional modeling system (NMM) as the core. After spending tens of millions of dollars on HWRF, it went operational earlier in this decade. The problem is that HWRF does a poor job on track forecasts, generally being worse than global models like the ECMWF, something demonstrated by the Matthew example shown above. Official verification statistics from the National Hurricane Center back this up (see below). If you can't get the track right, the intensity forecasts are not very useful.

My colleagues who are hurricane modeling experts feel that HWRF was a poor investment and one that had little future. In fact, the National Weather Service made the decision to stop most investment in HWRF last year.

But as worrisome as all the above issues are, perhaps no deficiency is so important as the lack of a high-resolution ensemble system over the U.S. Specifically, the US needs to use convection-allowing resolution (3-4 km grid spacing) for an ensemble of 30-50 members, which allows probabilistic prediction of thunderstorms, terrain and coastal effects, and smaller scale storms. A number of National Academy Committee reports, advisory committee reports, major national workshops, and user requests have asked the NWS to do this, but they have pushed it off. Instead, the NWS Storm Prediction Center has kludged together a very small Storm Scale Ensemble of Opportunity (SSEO) by acquiring forecasts from available high-resolution simulations. Not very good, but better than nothing.

Embarrassingly for the National Weather Service, NCAR has gone ahead and built a small, high-resolution ensemble (10 members, 3-km grid spacing) to prove the concept (see below). This has become a national hit and is used heavily by NWS forecasters. However, this test will end next summer.

If the U.S. is EVER going to provide state-of-the-art regional and local forecasts, a high resolution ensemble system must become operational. With the new computers, the NWS has enough computer resources to get started, with perhaps 3-8 members, and then can make the case for more computer power to do it right. Instead they have done nothing.

Finally, the National Weather Service has one great recent success: its High Resolution Rapid Refresh (HRRR) modeling system that provides high-resolution (3-km grid spacing) analyses and forecasts every hour. HRRR, which assimilates a wide variety of local weather data assets, is a real game changer, providing continuously updated forecasts.

Computers: Not Using the White Space

The National Weather Service is in a strange place with computer power. It needs 10-100 times more computer power to do what it should, but it is failing to use the computer resources it possesses. Why does the NWS need so much computer power? First, they need to run a high-resolution (say 3 km grid spacing) ensemble over the US with at least 30-50 members. They need to use better physics in their models (which is expensive). They need to run a large global ensemble at 15-20 km grid spacing and to experiment with running ultra high resolution global forecasts (3-4 km).

Earlier this year, the NWS acquired a much larger computer (in fact two computers!) from CRAY, providing them roughly 2-3 petaflops of maximum throughput. Roughly the same as what ECMWF has today. But right now, NCEP's EMC is only using about 35% of this computer resource, with the unused portion termed "white space". This is illustrated by the attached figure, which presents the current usage of the operational machines. This is very bad on multiple levels: First, they are not providing the quality of forecast products they could. Second, it is hard to ask for more computer resources when you aren't using what you have.

Post-processing: The Other Major Gap

After numerical weather prediction models are run, their output provides a description of the future state of the atmosphere. One could use those predictions without alteration, but it is possible to do much better than that through post-processing, a procedure in which numerical forecasts are improved using statistics. For example, if previous model forecasts at a location were systematically too warm by a few degrees, one can adjust the model forecasts downward. Such a procedure can be done for all weather variables (such as wind, humidity, and precipitation). Post-processing can greatly improve model forecasts.
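The "too warm by a few degrees" example can be sketched in a few lines of Python. This is the simplest possible form of post-processing (removing the mean error from past forecasts), offered only as an illustration; the function names and temperature values are invented, and operational schemes such as MOS are regression-based and considerably more elaborate.

```python
def mean_bias(past_forecasts, past_observations):
    """Average of (forecast - observation) over a training period."""
    if not past_forecasts or len(past_forecasts) != len(past_observations):
        raise ValueError("need equal-length, non-empty forecast and observation lists")
    errors = [f - o for f, o in zip(past_forecasts, past_observations)]
    return sum(errors) / len(errors)


def bias_corrected(new_forecast, bias):
    """Shift a new raw forecast by the bias learned from past forecasts."""
    return new_forecast - bias


# Hypothetical past high-temperature forecasts and observations (deg C) at one site.
past_fcst = [21.0, 19.0, 24.0, 18.0]
past_obs = [19.0, 17.0, 22.0, 16.0]

bias = mean_bias(past_fcst, past_obs)    # the model ran 2.0 deg C too warm
corrected = bias_corrected(20.0, bias)   # raw forecast of 20.0 becomes 18.0
print(f"bias = {bias:+.1f} C, corrected forecast = {corrected:.1f} C")
```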

The National Weather Service has done post-processing for over a half-century, using an approach called Model Output Statistics (MOS). The problem is that they are still basically using the same approach applied in the 1970s, while the science/technology of post-processing has become much more sophisticated. For example, the Weather Channel, weather.com, weatherunderground, and Accuweather used a much more sophisticated approach originally developed by the National Center for Atmospheric Research (NCAR) called DiCast. In this approach, the final forecast depends on statistically massaging a large number of sources of weather forecasts and observations. Using inferior post-processing, the public forecasts provided by the National Weather Service are substantially inferior to those of the private sector firms noted above, something the media has not caught on to yet.

Want proof? A website, forecastadvisor.com, evaluates the forecasts at major U.S. cities. Here are the results for Seattle and New York. The NWS forecasts are far behind. Choose your own city; the results will be very similar.

Only today is the National Weather Service starting to experiment with a better approach using more types of forecast inputs (the National Blend of Models), but this work is going glacially slowly and is not available to the public. And there are other new approaches, like Bayesian Model Averaging (BMA), which is being used by other countries, like Canada. A group of us at the UW have worked on BMA and proved its value. There was lots of interest in our work by foreign NWP centers, but not a single inquiry by our own National Weather Service.
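For readers unfamiliar with BMA, the core idea is that the forecast probability distribution is a weighted mixture of distributions centered on the individual model forecasts, with better-performing models receiving larger weights. The Python sketch below illustrates that idea for temperature using normal distributions. It is a simplified illustration, not the UW implementation: the weights and spread here are made-up numbers, whereas in practice they are estimated from a training period of past forecasts and observations.

```python
import math


def normal_cdf(x, mean, sigma):
    """Cumulative distribution function of a normal distribution."""
    return 0.5 * (1.0 + math.erf((x - mean) / (sigma * math.sqrt(2.0))))


def bma_mean(forecasts, weights):
    """Weighted average of the member forecasts (the mean of the mixture)."""
    return sum(w * f for w, f in zip(weights, forecasts))


def bma_prob_below(threshold, forecasts, weights, sigma):
    """P(observation <= threshold) under a weighted mixture of normals."""
    return sum(w * normal_cdf(threshold, f, sigma) for w, f in zip(weights, forecasts))


# Hypothetical overnight low forecasts (deg C) from three models.
forecasts_c = [1.0, -1.0, 3.0]
weights = [0.5, 0.3, 0.2]   # assumed weights; must sum to 1
sigma_c = 2.0               # assumed spread of each member's distribution

assert abs(sum(weights) - 1.0) < 1e-9
print(f"BMA mean low: {bma_mean(forecasts_c, weights):.1f} C")
print(f"P(low <= 0 C): {bma_prob_below(0.0, forecasts_c, weights, sigma_c):.2f}")
```

The appeal of this approach is that it produces a calibrated probability (here, the chance of freezing) rather than a single number.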

The Observing System: Poor Hardware Acquisition, Lack of Rational Analysis and Not Using All Available Resources

Observations are the lifeblood of any numerical forecasting system and in this area the NWS has major challenges. Their problems with planning and building weather satellites (the most important observing system) are

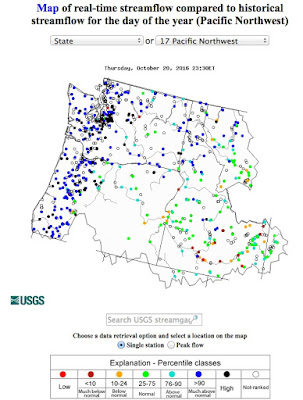

well known, so I won't deal with those. Another issue is the lack of use or minimal application of key new observing systems, such as the Panasonic TAMDAR system on short-haul flights. But perhaps the most serious deficiency is the lack of rational design of the Nation's observing system using numerical simulation approaches called OSSEs--Observing System Simulation Experiments. And the NWS needs to better explore the potential of less expensive satellite assets, like large numbers of GPS radio occultation sensors on cheap micro satellites. The National Weather Service also needs to fill gaps in US radar coverage, such as the huge hole over the coastal zone of Oregon or the eastern slopes of the Cascades of eastern Oregon and Washington. Weather data from drones and smartphones (e.g., pressure) might greatly contribute to forecast accuracy.

TAMDAR data from short-haul aircraft could provide critical weather information in the lower atmosphere.

So What Are the Essential Problems with the National Weather Service Operational Weather Prediction Effort and How Can They Be Fixed?

By now, you are probably tired of reading about how the National Weather Service's numerical weather prediction and statistical post-processing have fallen behind. Trust me, I could have written much, much more. What has caused this decline from state-of-the-science and how can we improve the situation rapidly?

I believe the origins are straightforward and correctable, IF Congress or high level administrative managers intervene, armed with knowledge of the "skeletons" in the NWS closet. Here is my take.

The Organizational Structure of US Numerical Weather Prediction is Profoundly Flawed and Must Be Substantially Changed

To help me explain this, study the organizational charts for NOAA and the NWS shown below. As most of you know, the National Weather Service is part of NOAA. Operational numerical weather prediction is done by the Environmental Modeling Center (EMC), which is part of NCEP (National Centers for Environmental Prediction), which in turn reports to Louis Uccellini, the director of the NWS. EMC and NCEP are led by Drs. Mike Farrar and Bill Lapenta, respectively, two individuals for whom I have a great deal of respect. Dr. Uccellini has deep experience in numerical weather prediction. So how can things be going so wrong?

Because the organizational structure is a disaster.

The individuals who are responsible for operational numerical weather prediction have NO control over the groups responsible for developing new weather forecast models or improving the old ones. In fact, those model development groups ARE NOT IN THE NATIONAL WEATHER SERVICE but rather in NOAA, mainly at the Earth System Research Lab (ESRL in Boulder) and GFDL in Princeton. Not only do the NWS modeling folks not control those research labs, but in the not so distant past there were profound tensions between the two groups (which are now over).

Those responsible for numerical weather prediction also have no control over model post-processing, which is found in MDL (Meteorological Development Lab), part of the Office of Science and Technology (OST). Funding and organization of new model development is NOT controlled by those responsible for the modeling in NCEP, but by OST.

It is very difficult, if not impossible, to develop, fund, and implement a coherent numerical weather prediction program with the highly fragmented approach in place in the National Weather Service.

ONE organization and leadership team needs to be responsible for US operational NWP, from research to development to implementation. Such is the approach used by NCEP's more successful competitors, like ECMWF and UKMET. Creating such a coherent, integrated organizational structure needs to be the number one priority of Congress and NOAA management.

One concrete suggestion I will make: the NWS should center its model development and research in Boulder, Colorado, the de facto home of much of US NWP research. Boulder contains the vast National Center for Atmospheric Research and the NOAA ESRL lab, plus many private sector firms. NCEP's EMC could move some of its personnel to Boulder, which is a location that is both centrally located and attractive for outside visitors. The Developmental Testbed Center (DTC) in Boulder could play a critical role in model verification, evaluation, and community support.

The National Weather Service/NOAA Needs Both a Strategic Plan and An Implementation Plan for US Numerical Weather Prediction.

You can't make much progress if you don't know where you are going. The National Weather Service NWP does not know where it is going. Most of their initiatives have been reactive and not the result of coherent, long-term planning. Major hurricanes hit the U.S. (e.g., Katrina); then, with Congressional funding, they start a Hurricane Forecast Improvement Program (HFIP), which plows funds into an ad hoc modeling system (HWRF). As noted above, this money was essentially wasted on a system that has no future. Hurricane Sandy hits, revealing that US global modeling is inferior, and the result is more money (the Sandy Supplement) for computers (which is good), research, and a new global modeling effort (the NGGPS program).

A lack of strategic and implementation plans is the NWP equivalent of driving blind

The National Weather Service does not have a strategic plan for numerical weather prediction, one that lays out the broad outline of how its models and post-processing will evolve over 5-10 years. Its competitors have such plans. In creating such a plan, the NWS cannot work in isolation, but must entrain the advice and concordance of its major partners in the research, user, and private sector communities.

The NWS then needs more detailed implementation plans that lay out specific development programs over 2-3 years.

The National Weather Service Needs to End Its Intellectual Isolation

One of the primary reasons that NWS NWP is in relative decline has been its isolation from the very large U.S. research community, both in academia and the private sector. Community models, like WRF, offer the potential to leverage huge outside investments and a large community of scientists and users. Instead, NCEP's EMC has preferred to use in-house models that inevitably were not state-of-the-science. Importantly, NCEP's greatest recent success (HRRR) made use of the community-based NCAR model.

All future NWS models should be community models, but the NWS has little experience in this approach. Thus, the NWS should lean heavily on the National Center for Atmospheric Research, which has a proven track record in developing community models. Specifically, the NWS and NCAR should work together to develop the next global prediction model.

A somewhat sensitive issue is that a small subset of folks at NCAR are not enthusiastic about working with the NWS, having been burned by the rejection of WRF nearly two decades ago. These NCAR folks need to get over their hurt feelings, and if necessary, NCAR management needs to intervene to ensure NCAR/NWS cooperation in the future, which would be good for both institutions and the nation.

Too Many Models and Unification

The National Weather Service is running too many models, which increases costs and lessens the resources available to move forward on any particular model. Forward looking NWP centers, like the UKMET Office, have moved toward unified modeling, using the same modeling systems at all resolutions. The NWS should aggressively move in this direction in concert with collaborators such as NCAR.

Second Rate is Not Good Enough

There has been a willingness within NWS management to allow US operational NWP to become second rate. It should not take folks like myself hectoring them from the sidelines. New leaders have taken over key NWS modeling portfolios and they are saying the right things, but the proof is in the pudding, so to speak. Recently, I was talking to a very high ranking NOAA official who told me I had to understand that they were working on "government time" and that I needed to be patient. Other governments (Canada, UK) and inter-governmental organizations (ECMWF) seem to be working much faster, so I don't find such arguments compelling.

The complacency of NOAA officials in some respects reflects an "American Disease": believing we are destined to remain number one, even without investments and hard work. As shown in many other areas, U.S. scientific/technical leadership needs to be continuously earned or it is lost.

Final Comments

Only reorganization of US NWP development and operations can solve the long-term relative decline of US weather prediction, and it will probably take Congress to make it happen. A detailed strategic plan for US NWP should be developed with the national weather community.

In the short term, NOAA/NWS should move all forecast model development to Boulder and work out a long-term relationship with NCAR and the vast US weather research community to jointly develop the next generation global modeling system. Furthermore, the NWS should initiate a high-resolution ensemble system during the next year, end the HWRF effort, and drop the poorly verifying NMM modeling system.