And let's be very clear, I don't mean that NWS forecasts are getting less skillful. They are getting better. But it is clear that the NWS has fallen behind the state-of-the-art and is not producing as skillful or useful products as it could or should.

This blog will consider the role of human forecasters in the NWS and how the current approach to prediction is a throwback to a past era. As a result, forecasters don't have time to take on important tasks that could greatly enhance the quality of the forecasts and how society uses them.

Let me begin by showing you a comparison of forecast accuracy of the NWS versus major online weather sites, such as weather.com, weatherunderground.com, accuweather.com, and others. This information is from the web site https://www.forecastadvisor.com/, which rates the 1-3 day accuracy of temperature and precipitation forecasts at cities around the nation. I have confirmed many of these results here in Seattle, so I believe they are reliable. I picked three locations (Denver, Seattle, and Washington DC) to get some geographic diversity. Note that the NWS Digital Forecast is the product of NWS human forecasters.

The National Weather Service forecasters are not in the top four at any location for either the last month or year, and forecast groups such as The Weather Channel, Meteogroup, Accuweather, Foreca, and Weather Underground are in the lead. And these groups have essentially taken the human out of the loop for nearly all forecasts.

Or we can take a look at some of the NWS's own statistics. Here are the mean absolute errors for surface temperature for forecast sites around the U.S., comparing U.S. forecasters (NDFD), the old U.S. objective forecasting system called MOS (Model Output Statistics), which applies statistical post-processing to model output, and a new NWS statistical post-processing system called the National Blend of Models (NBM). Lower is better. The blend (no humans involved) is as good as or better than the forecaster product for forecasts going out 168 h.

12-h precipitation? The objective blend is better (lower is better on this graph).

I could show you a hundred more graphs like this, but the bottom line is clear: human forecasters, on average, cannot beat the best objective systems that take forecast model output and then statistically improve the model predictions.

Statistical post-processing of model output can greatly improve the skill of the model predictions. For example, if a model is systematically too warm or cold at a location at some hour based on historical performance, that bias can be corrected. (Reminder: a computer forecast model solves the complex equations that describe atmospheric physics.)
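To make the bias-correction idea concrete, here is a minimal Python sketch. It is only an illustration, not the method used by MOS or the National Blend: the function names (`fit_hourly_bias`, `correct`) and the temperatures are made up, and it assumes the simplest possible correction, subtracting the mean past error for each hour of the day at one location.

```python
from collections import defaultdict
from statistics import mean


def fit_hourly_bias(history):
    """Estimate the mean forecast error for each hour of the day.

    history: iterable of (hour, forecast_temp, observed_temp) tuples
    for a single location (hypothetical data layout).
    """
    errors = defaultdict(list)
    for hour, forecast, observed in history:
        # Positive error means the model ran too warm.
        errors[hour].append(forecast - observed)
    return {hour: mean(errs) for hour, errs in errors.items()}


def correct(forecast, hour, bias_by_hour):
    """Subtract the learned bias; leave the forecast alone if the hour is unseen."""
    return forecast - bias_by_hour.get(hour, 0.0)


# Made-up past forecasts and observations (degrees F) at one site.
history = [
    (15, 72.0, 70.0),
    (15, 68.0, 65.0),
    (15, 75.0, 74.0),
    (6, 50.0, 52.0),
    (6, 48.0, 49.0),
]

bias_by_hour = fit_hourly_bias(history)
corrected_afternoon = correct(71.0, 15, bias_by_hour)  # model runs warm at 15:00
corrected_morning = correct(50.0, 6, bias_by_hour)     # model runs cold at 06:00
```

In this toy data the model is on average 2 degrees too warm in mid-afternoon and 1.5 degrees too cold in the early morning, so a raw 71 at 15:00 becomes 69 and a raw 50 at 06:00 becomes 51.5. Operational systems use far longer training records and more predictors, but the principle is the same.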

National Weather Service forecasters spend much of their time creating a graphical rendition of the weather for their areas of responsibility. Specifically, they use an interactive forecast preparation system (IFPS) to construct a 7-day graphical representation of the weather that will be distributed on grids of 5-km grid spacing or better. To create these fields, a forecaster starts with model grids at coarser resolution, uses “model interpretation” and “smart” tools to combine and downscale model output to a high-resolution IFPS grid, and then makes subjective alterations using a graphical forecast editor.

Such gridded fields are then collected into a national digital forecast database (NDFD) that is available for distribution and use. The gridded forecasts are finally converted to a variety of text products using automatic text formatters.

So when you read a text NWS forecast ("rain today with a chance of showers tomorrow"), that text was written by a computer program, not a human, using the graphic rendition of the forecast produced by the forecasters.

But there is a problem with IFPS. Forecasters spend a large amount of time editing the grids on their editors and generally their work doesn't produce a superior product. Twenty years ago, this all made more sense, when the models were relatively low resolution, were not as good, and human forecasters could put in local details. Now the models have all the details and can forecast them fairly skillfully.

And this editing system is inherently deterministic, meaning it is based on perfecting a single forecast. But the future of forecasting is inherently probabilistic, based on many model forecasts (called an ensemble forecast). There is no way NWS forecasters can edit all of them. I wrote a peer-reviewed article in 2003 outlining the potential problems of the graphical editing approach...unfortunately, many of these deficiencies have come to light.

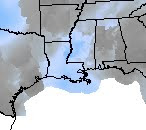

Another issue is the "seams" between forecast offices. Often there are differences in the forecasts between neighboring offices, resulting in large changes at the boundaries of responsibilities...something illustrated below by the sky coverage forecast for later today:

Spending a lot of time on grid editing leaves far less forecaster time for more productive tasks, such as interacting with forecast users, improving very short-term forecasting (called nowcasting), highlighting problematic observations, and much more.

But what about the private sector?

Private sector firms like Accuweather, Foreca, and the Weather Channel all use post-processing systems that descended from the DICast system developed at the Research Application Lab (RAL) of the National Center for Atmospheric Research (NCAR). DICast (see schematic below) takes MANY different forecast models and an array of observations and combines them in an optimal way to produce the best forecast. Based on past performance, model biases are reduced and the best models are given heavier weights.
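The general idea of removing each model's bias and then weighting the better models more heavily can be sketched in a few lines of Python. This is not DICast's actual algorithm, which is not described here; the inverse-error weighting, the function names (`train_blend`, `blend`), the model names, and all numbers are assumptions chosen purely for illustration.

```python
from statistics import mean


def train_blend(past_forecasts, observations):
    """Learn a bias and a weight for each model from past performance.

    past_forecasts: {model_name: [forecast, ...]} aligned with observations.
    Weights are proportional to 1 / (mean absolute error after bias removal),
    which is one simple choice among many.
    """
    biases, weights = {}, {}
    for name, forecasts in past_forecasts.items():
        bias = mean(f - o for f, o in zip(forecasts, observations))
        mae = mean(abs(f - bias - o) for f, o in zip(forecasts, observations))
        biases[name] = bias
        weights[name] = 1.0 / (mae + 1e-6)  # small constant avoids division by zero
    total = sum(weights.values())
    weights = {name: w / total for name, w in weights.items()}
    return biases, weights


def blend(new_forecasts, biases, weights):
    """Combine bias-corrected forecasts using the learned weights."""
    return sum(weights[n] * (f - biases[n]) for n, f in new_forecasts.items())


# Made-up training data (degrees F) for two hypothetical models.
observations = [60.0, 62.0, 58.0, 65.0]
past_forecasts = {
    "model_a": [63.0, 63.0, 61.0, 66.0],  # warm bias, small scatter
    "model_b": [57.0, 65.0, 55.0, 68.0],  # no bias, large scatter
}

biases, weights = train_blend(past_forecasts, observations)
blended = blend({"model_a": 72.0, "model_b": 68.0}, biases, weights)
```

Here model_a has a warm bias of 2 degrees but little scatter once that bias is removed, so it ends up with roughly three quarters of the weight, and the blended forecast lands near 69.5. A real system would learn separate weights by location, lead time, and variable, and would fold in observations as well.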

This kind of system allows the private sector firms to use any input for their forecasting systems, including the gold-standard European Center model and their own high-resolution prediction systems, and is more sophisticated than the MOS system still used by the National Weather Service (although they are working on the National Blend).

The private sector firms do have some human forecasters, who oversee the objective systems and make adjustments when necessary. The Weather Channel folks call this "Human over the Loop".

The private sector is using essentially the same weather forecasting models as the NWS, but they are providing more skillful forecasts on average. Why? Better post-processing like DICast.

Machine Learning

DICast and its descendants might be termed "machine learning" or AI, but they are relatively primitive compared to some of the machine learning architectures currently available. When these more sophisticated approaches are applied, taking advantage of the increased quality and quantity of observations and better forecast models, the ability of humans to contribute directly to the forecast process will be over. Perhaps ten years from now.

So What Should the National Weather Service Do?

First, the NWS needs to catch up with the private sector in the area of post-processing of model output. For too long the NWS has relied on the 1960s technology of MOS, and the new National Blend of Models (NBM) still requires work. More advanced machine learning approaches are on the horizon, but the NWS needs to put the resources into developing a state-of-the-art post-processing capability.

Second, the current NWS paradigm of having a human be a core element of every forecast by laboriously editing forecast grids needs to change. The IFPS system should be retired and a new concept of the role of forecasters is required: one in which models and sophisticated post-processing take over most of the daily forecasting tasks, with human forecasters supervising the forecasts and altering them when necessary.

1. Forecasters will spend much more time nowcasting, providing a new generation of products/warnings about what is happening now and in the near future.

2. With forecasts getting more complex, detailed, and probabilistic, NWS forecasters will work with local agencies and groups to understand and use the new, more detailed guidance.

3. Forecasters will become partners with model and machine learning developers, pointing out problems with the automated systems and working to address them.

4. Forecasters will intervene and alter forecasts during the rare occasions when objective systems are failing.

5. Forecasters will have time to do local research, something they were able to do before the "grid revolution" took hold.

6. Importantly, forecasters will have more time for dealing with extreme and impactful weather situations, enhancing the objective guidance when possible and working with communities to deal with the impacts. 44 people died in Wine Country in October 2017 from a highly predictable weather event. I believe better communication can prevent this.

I know there are fears that the NWS union will push back on any change, but I hope they will see modernization of their role as being beneficial, allowing the work of forecasters to be much more satisfying and productive.

On some of the new roles you project for NWS forecasters in the future, between the latest round of applications (and how I got rejected from the latest openings with conversations), and the mets I know at a few NWS offices, here's how I see your recommendations.

Number 1 won't be an issue for the most part, as long as the mets on duty are paying attention to what is going on and are not too involved elsewhere.

Number 2: for some EM's and officials that would ask for advice, especially in the richer, more populous, and more educated areas; they would be a lot easier to reach, especially with the probabilistic nature of the forecasts. And I don't see much of an issue. But where I see the issue, is in poorer and less educated areas where EM's and other officials just aren't as sophisticated or are wearing multiple hats. The mets will have to take on the role of educators, potentially on the fly in the absence of IWT meetings, in order to get the word out. And more than a few of these managers quite frankly may be more than a bit stubborn and not care a bit for probabilistic products, even if they are better for decision-making. You may see a lot of frustration set in unless there's a big mindset change on both sides. And I don't know how much patience mets and older EM's have.

Number 3, I see that as something mets will take to quite easily, just because humans love to be critics.

Number 4: In theory, I agree with you. But if you take most of the forecasting out of the forecasters' hands, I do foresee a lot of complacency setting in, even when the models start to veer off. And with that complacency, I wonder how many mets under this system after a while will even recognize if something is going wrong until it's too late. And I could see a lot of clients and the general public going ballistic when it happens (and it would happen numerous times before people got the hint, unfortunately).

As for number 5: some offices already do this, from what I know and from what I have seen from a few recent NWA and AMS papers. But pulling for all offices to do that, it will depend on staffing.

One last thing you didn't mention here: how do you see the staffing levels at the resulting offices and office duties? Will they go up to the staff numbers they were supposed to be when reorganization (which offices haven't seen for years)? Would these revised operations actually cause Congress to think office staff could be reduced (even though it really can't, because of what would be needed in surge capacity, and it would be really short-sighted to add more stress than already takes place for 24/7 offices, especially with recent developments where the stress of under-populated offices in 24/7 operations is causing a lot more mental illness [including, but not exclusively, clinical depression])? And if we are talking more training or a bunch of re-training to do this, will salaries actually match the extra cost of the advanced education and training (aka debt service of education loans, which can't be wiped out in bankruptcy like other debt)?

This post dealt with forecasting of two variables, temperature and precip, at local levels. Not hurricanes, not tornadic supercells, not mesogamma-scale freezing-rain/snow transitions.

Yet something like this easily can be misinterpreted, or weaponized by management and politicians in a misleading and overgeneralized way, to say that all human forecasting is outdated.

Nothing can be further from the truth, especially with complex, multivariate phenomena such as hurricanes, severe local storms, and winter weather, locally and nationally -- and days out, not just hours. [In fact, the mention of IFPS is outdated; that tool has been gone from local forecast offices for many years.]

There still is a place for the art of meteorology within the science, for the right brain, and for human *understanding* of phenomena. Models do not *understand*; they simply compute. And in doing so, they still often compute wrongly, especially with multivariate phenomena. Errors occur sometimes due to deficiencies in physics or post-processing, but most often when garbage in (lack of more spatiotemporally dense and accurate observational input) produces garbage out. Physical, conceptual understanding of the atmosphere, combined with keen and detailed diagnostic practice, arms the forecaster with tools needed to give value above and beyond models...again, especially for complex and multivariate processes.

All that said,

1. Day-4 grid forecasting of simple single variables like temperature and dew point is a futile endeavor in a cost/benefit sense, since those few highly specific and localized cases where the humans *consistently* can get them more right tend to average out fast in the bigger wash nationally;

2. Human forecasters do hasten their own obsolescence, and automation, by blindly parroting model output for features with which models still do struggle. This can happen via personal scientific atrophy (including loss of diagnostic skill), overburdening of workload (mission creep) by management that takes away human-analysis and situational-awareness time, or a combination of both.

As for the need to ensure keen, well-practiced human understanding to override autopilot whenever necessary, I offer three words: Air France 447.

I'm a big fan of Weatherbell Analytics, and Joe Bastardi.

Great job Cliff. I completely agree. Much of the work done in the local office is spent on managing product creation. Granted IFPS takes up a majority of the time, but even at that there are a myriad of additional text based products, and now social media requiring a lot of editing time. Let the computers do the long range forecasting and long range social media stuff. NWS forecasters need to be let back to realtime "Now" weather, where it counts at the local level. Besides the obvious hurricanes, tornados, and severe storms, one area that needs attention is monitoring of atmospheric conditions that lead to excessive rainfall and surface land response to rainfall at a fine resolution accounting for topography. But that is another discussion.

Well-put, Doc. But NWS has all those people, what to do with them? They are needed for warnings and emergencies and so are put to work forecasting assuming fair-weather conditions, just for something to do, in effect. I was quite happy to let the models do the work beyond day 1 or 2. We really made our money on the first 6 hours. So long as NWS has sole warning responsibility, they'll need the staff.

Cliff, thanks for posting. Every semester, I require the seniors in my required synoptic (fall) and mesoscale (spring) courses to participate in a semester-long forecast contest, involving forecasting of 24h max and min temperature, and categorical precip and snowfall at Des Moines, IA, and a city I choose based on challenging weather conditions. When we do verification, I always include the NWS values because I thought that would be a lofty target to which students could aim. However, after doing this for many years, I was surprised that the NWS often ends up well down in the pack. In the last 5 years, an average of 45% of the students score better. In the last 5 semesters, the figure has been closer to 60% of the students beating the NWS forecasts. I end up using this to encourage the students not to just parrot what the NWS is saying, but to trust their own abilities. I also try to avoid giving the students too negative a view of the NWS, by explaining that one reason they can outperform the NWS is that the NWS forecasters get tasked with doing lots of other activities, so this shows the power of being able to devote one's attention to just a few specific sites.

Thanks Bill.... same thing with our local forecast contest. I don't think it is well known that the typical forecast is better from weather.com than the NWS.... and it is a sign to NWS management that change is needed. Both the backend (models/postprocessing) and front end (what NWS forecasters do) need to change..cliff

Thanks for your continuing role as an honest broker, continuing to shine light on the NWS. Data you present clearly show that machine post processing results in lower mean error than human post processing. In a control system, one frequently wishes to minimize maximum error rather than mean error. Are there data on max error performance of human and machine post processing?

Just a thought - perhaps one that seems to be ambiguously hinted at here in the comments, but may fundamentally account for any resistance to any ideas of "change".

So long as data and process is rich, nearly any skill domain - such as playing chess, medicine, engineering and perhaps even weather forecasting - has consistently been shown to benefit from well crafted yet often simple "robots" doing the work while actually minimizing the "clinical opinions" of the human expert.

Anyone familiar with Daniel Kahneman's book "Thinking, Fast and Slow" will be aware of this well substantiated phenomenon, so I won't repeat it here. But it is worth highlighting that in nearly all cases and in the face of overwhelming empirical evidence that all of this is true, the experts were predictably hostile and often obstructive or flat out in denial.

The most famous example was Kahneman being hired to assess a major investment firm and presumably provide tools for improvement. The assessment, which was statistically robust, concluded that their skill of predicting stock value was no better than "monkeys throwing darts" and that the best possible tool available for improvement was to simply invest in index funds and leave them alone for a decade or more.

This of course was received with all the enthusiasm of a fart in the board room and he was politely thanked for his opinion and never invited back for any follow up.

To paraphrase Bob Dylan. "There is no security like Job security but job security is no security at all"

Why does the NWS even bother with gridded forecasts anymore if the private sector is beating them? How many in the general public even use the NWS forecasts? I am fully supportive of the NWS owning the issuance of watches/warnings, but it seems wrong that they are competing with private industry on gridded forecasts, especially since they aren't as good. The NWS should update their systems to utilize AI/machine learning for the issuance of watches and warnings, communicate those threats to stakeholders and the public, and educate the public on weather safety. They could do all of this from a few regional offices and save the taxpayer millions.

Excellent and thought provoking Cliff. Agree with most points. But I would sure argue to move #6 much higher (if 1-6 is a ranking in your thinking), even into the top 3. Your last sentence, "I believe better communication can prevent this" is more critical than ever in the future you envision and to our science's service to the public, economy and country, led by our NWS, in the years ahead.

A follow-up to a comment above is to suggest that any program for a meteorology major include a required course in communication or introduction to behavioral psychology, preferably both, and the required reading of Kahneman's classic, "Thinking, Fast and Slow".

Years ago during the "Fair Weather" report study, we found that the local NWS AFD was one of the most read NWS products. Why? Because users and the public knew it was prepared by a human. Improving and adding our "human touch" to the, at times, silly and confusing current wording of text forecasts, is where we should be investing more time and thought. The forecaster labors for hours over gridded model outputs he knows are hard to beat and then hits "Go" for a machine generated text he essentially has no control over. Is this the best we can do since the dawn of numerical forecasts 50+ years ago? The "perfect" forecast, not effectively communicated, which leads to a bad decision by the user/public is a "bad" forecast.

For decades, America has watched and listened to NHC directors Neil Frank, Bob Sheets, Max Mayfield and more (Ken Graham upcoming) to help with their decision making. Not because they hit the "Go" button on a text work station, but they were and are meteorologists with the expertise and trust that the public relies on during life threatening and high risk weather.

The recent integration of the NWS Haz Symp program of including color coded headlines and warning simplification is sure a step forward in more effective communication during the life threatening events of Cliff's #6 item. But, as Cliff and many others believe, the future best service and time of our National Weather Service and operational forecasters is in better forecast Messaging, rather than computer grid Massaging. Look forward to Cliff's upcoming chapters on this important topic.

Thank you, Cliff, for this article! I wholeheartedly agree with your overview of the current situation and of how to better utilize the skills that human forecasters possess (and that are needed). I’ve written blogs on the same subject, expressing similar thoughts.

In a nutshell, the reality is that human forecasters are simply no longer able to improve upon automated systems for most weather parameters and timeframes; in fact, in their zeal and honest desire to do so, they are often *subtracting* value from those forecasts. Studies in Canada have demonstrated this. Plus, editing machine-produced guidance (whether gridded- or point-based) is a tedious, thankless job, and a waste of time for highly-trained forecasters.

In my view, forecast operations should be completely overhauled to almost completely automate the routine, daily forecast, and to allow forecasters to focus on…

• weather parameters that NWP systems still struggle with, and are important. Esp. wind

• timeframes where they can add value, esp. in the 0-12 hour period (nowcasting)

• significant and severe weather

• helping users to understand how to use forecast data, integrating weather with other factors (e.g. water levels, drought conditions, air quality), weather preparedness

And, as you write, forecasters need to be given time to…

• conduct studies, keep their knowledge up-to-date, participate in simulators for sig weather days

• review their performance, to see where and how their adjustments (intercessions) are working (or not)

NWS forecasts here in Bend, Oregon are surprisingly poor. There seem to be biases in both the temperature and precipitation forecasts, with temperatures often being higher than forecast and precipitation often being lower than forecast. In fact, I implicitly adjust the forecasts I see from the NWS to correct for these apparent biases.

I have speculated that these biases are because our NWS forecasters are based over 150 miles away in Pendleton, Oregon, where they just don't understand nuances of the local weather here in Bend. But shouldn't they recognize this bias over time and then start to correct it?

I usually look at the Weather Underground forecasts and compare them to those from the NWS, and the WU forecasts seem to be more accurate and demonstrate less bias. Is that because WU uses an "AI" system that learns from past systematic errors and corrects for them?

www.weather.com isn't the Weather Channel even though it appears so. It's owned by IBM-THE WEATHER COMPANY (formerly WSI), but the Weather Channel still has some sort of relationship that allows their branding to remain. It's all IBM now...Mark Nelsen

I worked at TWC for over 30 years and when the "human over the loop" was implemented, it eventually led to me being laid off since they felt that less human intervention was needed. While from a statistical standpoint the automated forecast is better, this is heavily influenced by less active weather patterns, but when the weather is active and impactful often the automated forecasts are less than stellar and they need a forecaster to monitor and make adjustments, and if the staff is reduced this becomes more difficult.

Another very disturbing trend in automation is the "when will it rain" concept that TWC and others have invested in, where a very detailed short range forecast is created from some of the high resolution models like the HRRR- the problem is that these forecasts are treated as 100% accurate- "the rain will begin at 12:43PM and end at 1:45PM", and of course that forecast will change numerous times over since it is based on an assumption that these models are infallible when of course they are anything but.

You know, growing up, I always wanted to be a weatherman. Or so I thought.

I enjoyed the maps, I enjoyed weather events, I enjoyed the stats, and records, and the extremes.

As I got older, and legitimately looked into it as a career path, the education required was overwhelming. I was struggling in school and knew there was no way I would be able to afford the level of education needed.

Fast forward 20 years and I find myself still following the weather, still obsessed with the details, and longing to experience a good storm chase, or a hurricane, or a blizzard.

One thing that happened, is with advances in technology, timelapse technology has been accessible. I’m a visual learner. I was always a pictures guy. Recording timelapses of the skies even during mundane weather events has helped me understand the mundane processes that affect the weather. Namely topography.

The PNW I feel is one of the hardest regions to forecast, for certain events. The proximity to the marine air over the ocean, the continental air to the east, and the mountain ranges, and gorges and gaps through the mountains, and even smaller features all play a part in how the air moves and interacts with the upper levels. This is very evident in watching things like the fog forming and dissipating, or the marine layer, or even those “popcorn” clouds that form on sunny days. When you REALLY observe the weather in a certain area over a period of time, you notice clouds form and behave a certain way under certain conditions, usually in relation to wind direction, and topography. To me, it has become almost an obsession.

I really feel that using this technique, not just for the “wow” factor, can be a very useful learning tool as to how the atmosphere behaves in a given location under certain conditions.

I really believe comparing what happened, to what was forecast, is a tool, and that using timelapse technology is a very good way to not only educate forecasters, but the people who program and edit the computer driven models.

The weather is often looked at as a large scale phenomenon, that moves across the land, when in reality... it’s merely atmospheric winds and conditions that move across the land. Even in a broad system like an atmospheric river event (as you’ve written about) local topography can have vast effects on the weather seen in a very localized spot. These are the details that forecasters should be focused on.

Let the models tell you what weather will be passing through, and utilize the forecasters (or a more detailed *local* program/algorithm/model) to pinpoint area of interest.

I’m often discouraged that a lack of formal education has kept me from pursuing my passion, and am tired of hearing “why don’t you be a weatherman” when someone asks me what I’m passionate about and I reply “the weather”.

I just want my foot in the door...

Much as I dislike the political loading of "Privatization", how does what Weather Underground, AccuWeather, etc. are doing to NWS differ from what SpaceX, Orbital, etc. are doing to NASA? More for less $, and far less ossification.
